Despite their impressive capabilities, LLMs have faced a significant limitation: data isolation. Enter the Model Context Protocol (MCP), an open standard that aims to change how AI interacts with external systems.

Introduced by Anthropic in late November 2024, Model Context Protocol (MCP) is an open standard designed to unify communication between LLMs and external data sources and tools. MCP addresses the critical challenge of data silos that have previously prevented AI models from reaching their full potential. For example, without MCP, we would have to manually copy and paste the relevant context for the AI to analyze. However, with MCP, LLMs can automatically call MCP services to retrieve the necessary context and generate output accordingly.

Those familiar with the function calling feature of LLMs might now be wondering: how is MCP different from function calling? While function calling allows AI models to invoke functions, MCP goes further by establishing a standardized protocol for interaction between LLMs and external services. An MCP service is more than a single function call: it can perform a series of actions to fulfill the requests made by the LLM. At the same time, because every MCP service implements the same standard protocol, it is easier to plug many different services into an AI application. With MCP, integrating external data, the internet, and development tools into AI applications becomes practical.

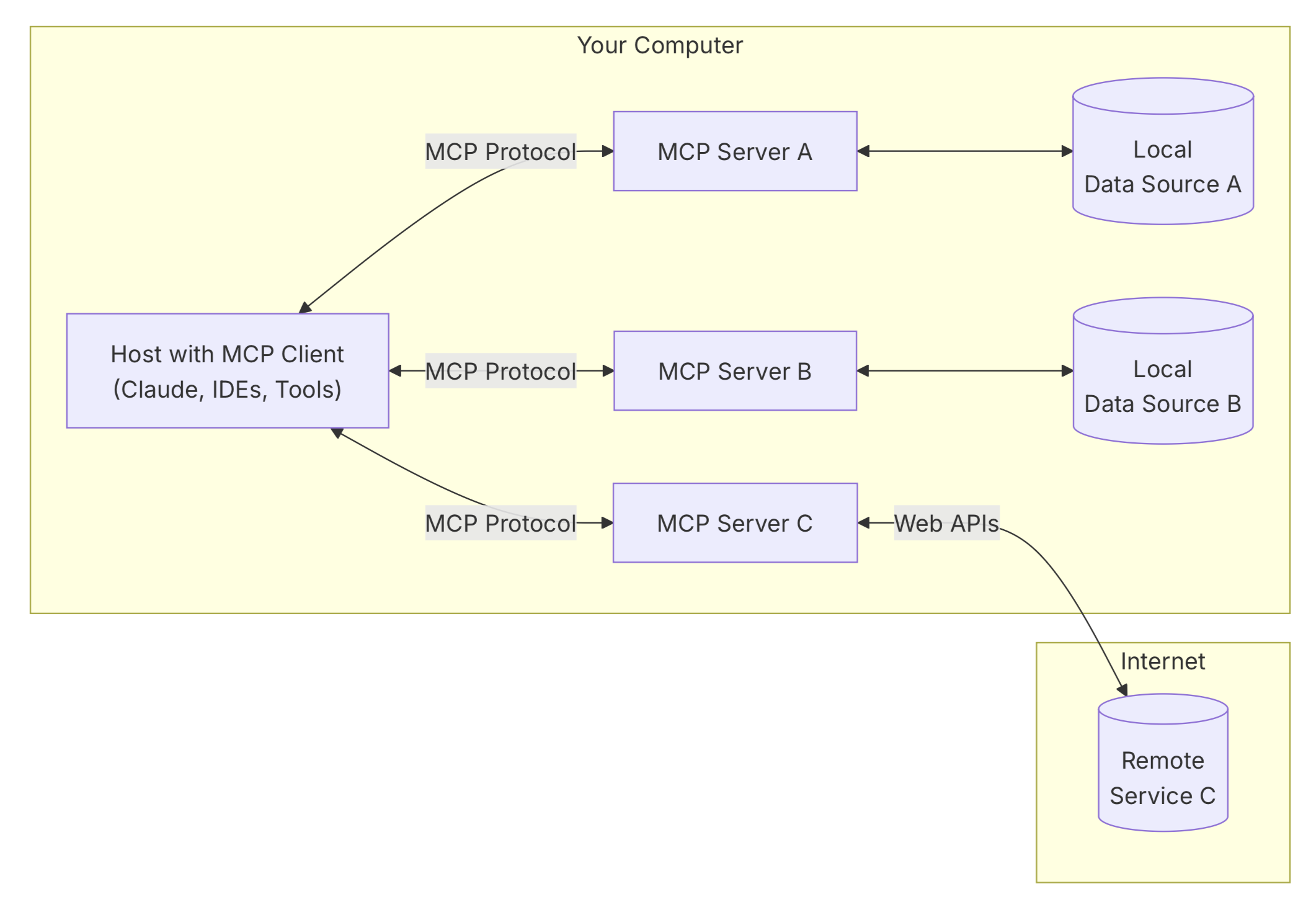

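MCP consists of three core components: hosts, the LLM applications (such as a desktop assistant or an IDE) that initiate connections; clients, which live inside the host and each maintain a one-to-one connection with a server; and servers, which provide context, tools, and prompts to clients.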

MCP servers can securely access both local resources (such as files and databases on the user’s computer) and remote resources (through APIs and online services), allowing LLMs to work with a wide range of data sources. The typical workflow begins when the client constructs a request message based on its needs and sends it to the server. Upon receiving the request, the MCP server parses its content and performs the corresponding operation (such as querying a database or reading a file). Then, the server packages the result into a response message and sends it back to the client. After the task is completed, the client can either actively close the connection or wait for the server to close it after a timeout.
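The request and response cycle described above can be sketched in a few lines of Python. This is a simplified simulation and not the official SDK: MCP messages follow JSON-RPC 2.0 and `tools/call` is the method the protocol uses to invoke a tool, but the `read_file` tool, the `FILES` dictionary, the `HANDLERS` registry, and the `send` function standing in for the transport are all hypothetical names chosen for illustration. A real client would also perform an initialization handshake and talk to the server over a transport such as stdio or HTTP.

```python
import json

# Hypothetical in-memory "files" the toy server is allowed to read.
FILES = {"notes.txt": "MCP was introduced in late November 2024."}


def read_file(arguments):
    """Hypothetical tool: return the contents of a known file."""
    return FILES[arguments["path"]]


# Hypothetical registry mapping tool names to handler functions.
HANDLERS = {"read_file": read_file}


def build_request(request_id, tool_name, arguments):
    """Client side: construct a JSON-RPC 2.0 request message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def handle_request(raw_message):
    """Server side: parse the request, run the operation, package a response."""
    request = json.loads(raw_message)
    params = request.get("params", {})
    handler = HANDLERS.get(params.get("name"))
    if request.get("method") != "tools/call":
        body = {"error": {"code": -32601, "message": "Method not found"}}
    elif handler is None:
        body = {"error": {"code": -32602, "message": "Unknown tool"}}
    else:
        text = handler(params.get("arguments", {}))
        body = {"result": {"content": [{"type": "text", "text": text}]}}
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), **body})


def send(raw_message):
    """Stand-in for the transport: here the 'connection' is a function call."""
    return json.loads(handle_request(raw_message))


if __name__ == "__main__":
    reply = send(build_request(1, "read_file", {"path": "notes.txt"}))
    print(reply)
```

The response carries the same `id` as the request, which is how a client matches replies to the requests it sent when several are in flight.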

An illustration of MCP from the official documentation

What gives LLMs diverse capabilities through MCP is the variety of MCP servers. MCP servers expose three key capabilities: resources, which represent readable data like file contents or API outputs; tools, which are callable functions that the LLM can execute with user consent; and prompts, which are pre-defined templates that guide users in performing specific tasks. This design allows LLMs to go beyond static input-output interactions and operate within a much richer, context-aware environment. Since the launch of MCP, a vibrant community has emerged around developing a wide variety of MCP servers, including our MCP Hub at NetMind. You can easily customize your AI application with a selection of MCP servers from our platform.
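To make the three capabilities concrete, here is a minimal sketch of a toy server object in plain Python. It does not use the official MCP SDK, and every name in it (`ToyServer`, `word_count`, the `file:///notes.txt` URI, the `summarize` template) is an assumption made for illustration. It only shows the distinction between the three: resources are read, tools are executed and gated on user consent, and prompts are templates that get filled in.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ToyServer:
    """Hypothetical stand-in for an MCP server's three capability types."""
    resources: Dict[str, str] = field(default_factory=dict)          # readable data
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)  # callable functions
    prompts: Dict[str, str] = field(default_factory=dict)            # reusable templates

    def read_resource(self, uri: str) -> str:
        """Resources are read-only: return the stored data for a URI."""
        return self.resources[uri]

    def call_tool(self, name: str, arguments: dict, user_consents: bool) -> str:
        """Tools have side effects or cost, so refuse to run without consent."""
        if not user_consents:
            raise PermissionError(f"user declined to run tool '{name}'")
        return self.tools[name](**arguments)

    def get_prompt(self, name: str, **values: str) -> str:
        """Prompts are templates: fill in the placeholders and return text."""
        return self.prompts[name].format(**values)


# Example server with one entry of each kind (all values are made up).
server = ToyServer(
    resources={"file:///notes.txt": "Build a Snake game in HTML."},
    tools={"word_count": lambda text: str(len(text.split()))},
    prompts={"summarize": "Summarize the following text:\n{text}"},
)

if __name__ == "__main__":
    spec = server.read_resource("file:///notes.txt")
    print(server.call_tool("word_count", {"text": spec}, user_consents=True))
    print(server.get_prompt("summarize", text=spec))
```

In this sketch the consent check is a boolean argument; in a real host application it would typically be a confirmation step shown to the user before the tool runs.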

To showcase the capabilities of MCP, our team recorded a demo using NetMind’s API services within Cline. In this video, we demonstrate how an AI can autonomously build a Snake game written in HTML by leveraging MCP tools. Throughout the demo, Cline uses MCP services such as file reading and code editing, collaborating with the user in an iterative workflow. The task is completed smoothly, and the final result is impressive. Watch our demo video here.

The emergence of MCP gives us a strong sense of déjà vu: thirty years ago, the Internet unlocked the true potential of personal computers. We believe that in the era of AI, MCP will do for large language models what the Internet once did for personal computing, unleashing the full potential of AI and raising its capabilities to a new level. As this ecosystem matures, we can expect increasingly sophisticated applications that combine the reasoning capabilities of LLMs with direct access to specialized tools and vast data resources.

If you’re building the next generation of AI-native applications, or if you’re an API provider looking to scale your distribution, our MCP Hub opens a new chapter in how AI services are built, accessed, and monetized.

To explore NetMind ParsePro, access other services, or onboard your own, head to https://www.netmind.ai/AIServices.