This guide uses our DeepSeek-R1-0528, the cheapest DeepSeek on the market, as an example.

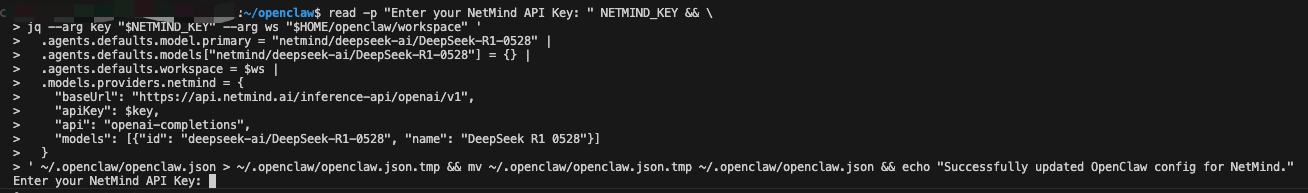

This script uses jq (a common JSON processor) to inject the NetMind configuration into your existing openclaw.json. It prompts for your API key, sets the workspace path to $HOME/openclaw/workspace, and writes the result to a temporary file before replacing the original config.

    read -p "Enter your NetMind API Key: " NETMIND_KEY && \
    jq --arg key "$NETMIND_KEY" --arg ws "$HOME/openclaw/workspace" '
      .agents.defaults.model.primary = "netmind/deepseek-ai/DeepSeek-R1-0528" |
      .agents.defaults.models["netmind/deepseek-ai/DeepSeek-R1-0528"] = {} |
      .agents.defaults.workspace = $ws |
      .models.providers.netmind = {
        "baseUrl": "https://api.netmind.ai/inference-api/openai/v1",
        "apiKey": $key,
        "api": "openai-completions",
        "models": [{"id": "deepseek-ai/DeepSeek-R1-0528", "name": "DeepSeek R1 0528"}]
      }
    ' ~/.openclaw/openclaw.json > ~/.openclaw/openclaw.json.tmp && mv ~/.openclaw/openclaw.json.tmp ~/.openclaw/openclaw.json && echo "Successfully updated OpenClaw config for NetMind."
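To confirm that the update landed, a small check along these lines may help. It is only a sketch: `verify_netmind_config` is a hypothetical helper name, not part of OpenClaw, and it inspects only the keys that the script above writes.

```shell
#!/usr/bin/env bash
# Sketch: check that an OpenClaw config contains the NetMind entries.
# verify_netmind_config is a hypothetical helper, not an OpenClaw command.

verify_netmind_config() {
  # Default to the config path used in this guide.
  local cfg="${1:-$HOME/.openclaw/openclaw.json}"

  command -v jq >/dev/null 2>&1 || { echo "jq is not installed" >&2; return 2; }
  [ -f "$cfg" ] || { echo "config not found: $cfg" >&2; return 2; }

  # jq -e exits with status 1 when the final result is false or null,
  # so the function succeeds only if every condition holds.
  jq -e '
    .agents.defaults.model.primary == "netmind/deepseek-ai/DeepSeek-R1-0528"
    and (.models.providers.netmind.baseUrl | type == "string")
    and (.models.providers.netmind.apiKey | type == "string" and length > 0)
  ' "$cfg" >/dev/null
}
```

Running `verify_netmind_config && echo "NetMind config looks good"` after the script should print the message. Copying the config aside first (for example with `cp ~/.openclaw/openclaw.json ~/.openclaw/openclaw.json.bak`) gives you a fallback if the check fails.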

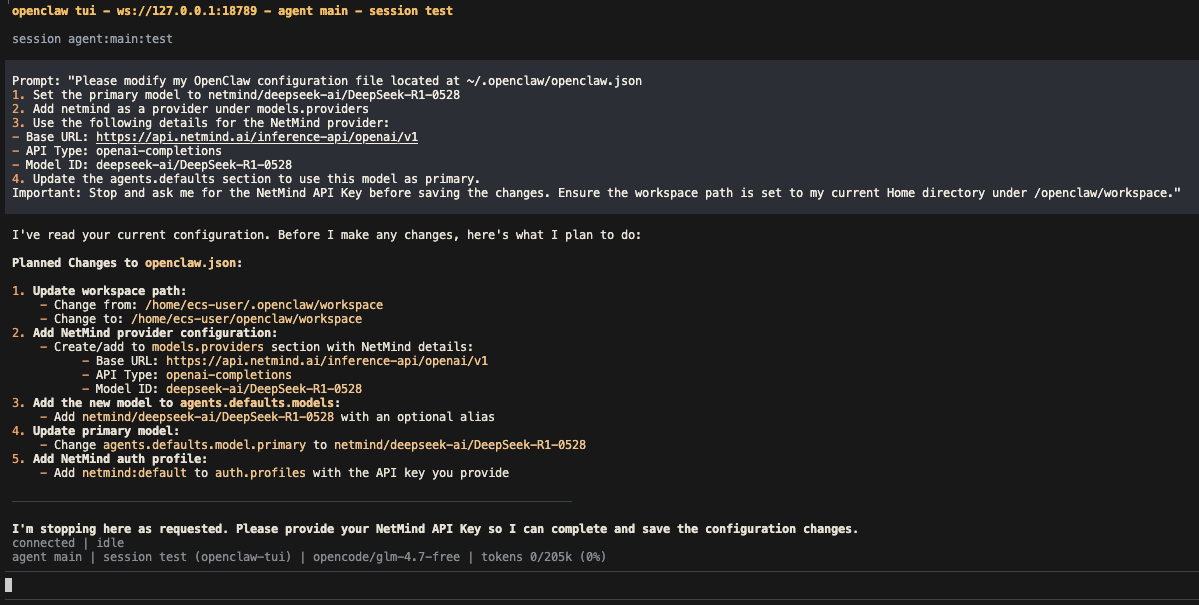

If you would rather have a coding agent make this change for you, use the following prompt:

Prompt: "Please modify my OpenClaw configuration file located at ~/.openclaw/openclaw.json as follows:

1. Set the primary model to netmind/deepseek-ai/DeepSeek-R1-0528

2. Add netmind as a provider under models.providers

3. Use the following details for the NetMind provider:

- Base URL: https://api.netmind.ai/inference-api/openai/v1

- API Type: openai-completions

- Model ID: deepseek-ai/DeepSeek-R1-0528

4. Update the agents.defaults section to use this model as primary.

Important: Stop and ask me for the NetMind API Key before saving the changes. Ensure the workspace path is set to openclaw/workspace under my home directory."
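Whichever route you take, the provider details above can be checked with a single request before you start OpenClaw. The sketch below assumes the endpoint exposes the usual OpenAI-compatible `/chat/completions` route under the base URL. The base URL and the `openai-completions` API type suggest this, but the guide does not confirm it. `build_payload` and `netmind_smoke_test` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: one-off request to check that the API key and model ID are accepted.
# ASSUMPTION: the standard OpenAI-compatible /chat/completions route exists
# under the base URL; this guide does not confirm it.

NETMIND_BASE_URL="https://api.netmind.ai/inference-api/openai/v1"
NETMIND_MODEL="deepseek-ai/DeepSeek-R1-0528"

# Build the JSON request body with jq so the prompt text is escaped safely.
build_payload() {
  jq -n --arg model "$1" --arg prompt "$2" \
    '{model: $model, messages: [{role: "user", content: $prompt}]}'
}

# Hypothetical helper: send the request and print the raw JSON response.
# curl -f makes HTTP errors (such as a rejected key) return a non-zero status.
netmind_smoke_test() {
  local key="$1"
  build_payload "$NETMIND_MODEL" "Reply with the word ok." |
    curl -sS -f "$NETMIND_BASE_URL/chat/completions" \
      -H "Authorization: Bearer $key" \
      -H "Content-Type: application/json" \
      -d @-
}
```

Call it as `netmind_smoke_test "$NETMIND_KEY"`. A JSON response suggests that the key, base URL, and model ID are valid. A non-zero exit status points to a problem with one of them, or to the assumed route being wrong.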

Happy building!