SGLang is a well-known open-source platform for deploying large language models. However, we discovered that DeepSeek models deployed through SGLang could not use function calling. In a recent commit to SGLang, our engineering team at NetMind resolved this issue, and the fix now benefits all users.

SGLang is a popular open‑source platform for serving large language models and vision‑language models at scale. It offers a seamless, API‑compatible interface that lets developers deploy inference workloads with minimal effort. With its lightweight runtime and intuitive scripting language, SGLang has become a go‑to choice in both research and production environments.

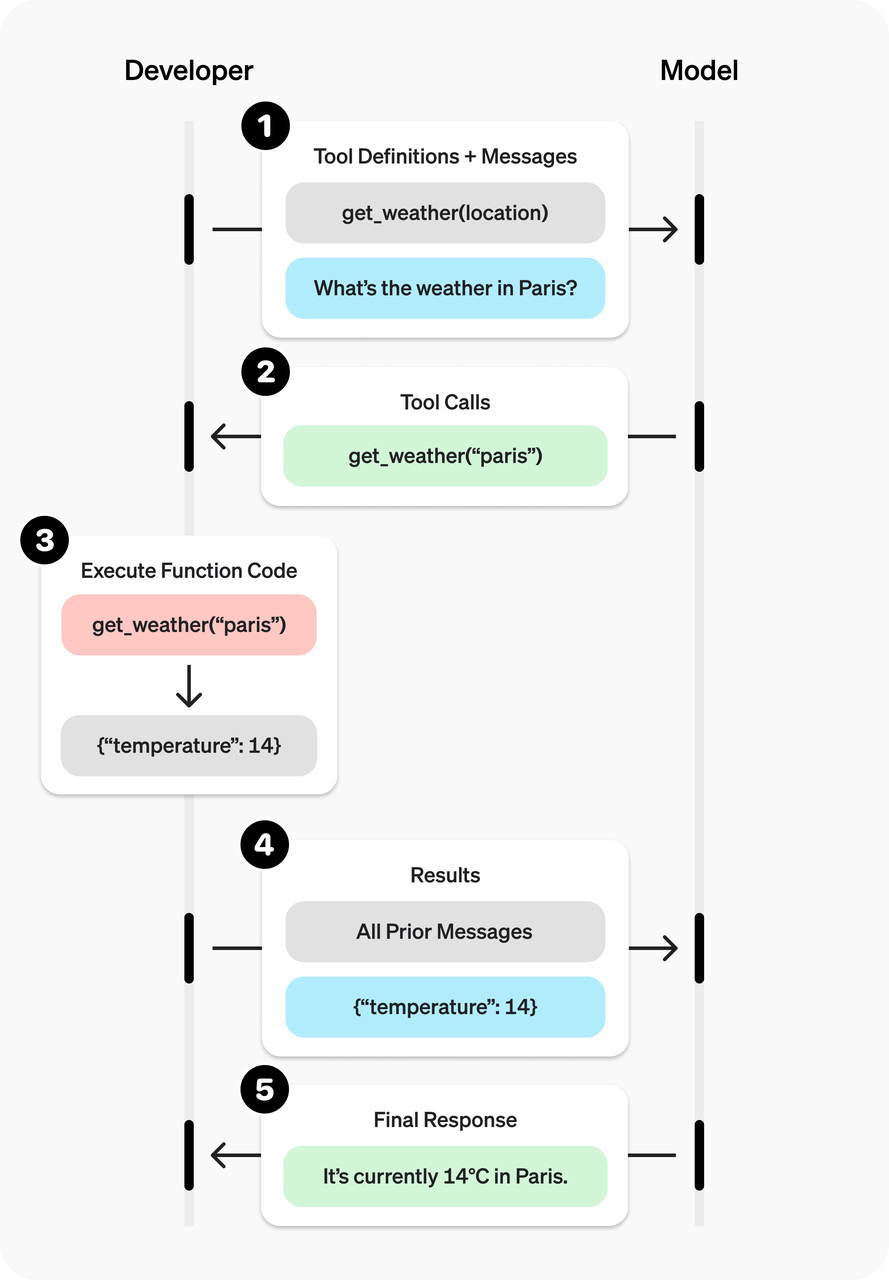

Until recently, however, there was one important limitation: DeepSeek series models deployed through SGLang did not support function calling. Function calling lets you register tools (functions) with an LLM and have the model decide which one to invoke by outputting a JSON object with the right parameters. This feature is a key component of recent LLMs because it enables them to query or even modify external data. Take a simple question such as "What is the weather like in Boston today?" Only with function calling can an LLM access the current date and up-to-date weather information.
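
To make the mechanism concrete, here is a minimal sketch of the application side of function calling. It assumes an OpenAI-style tool schema; the `get_weather` function, the `REGISTRY` mapping, and the hard-coded `model_output` string are hypothetical stand-ins for illustration, and no model or server is actually contacted.

```python
import json

# Tool description in the OpenAI-style "tools" format (assumed here for illustration).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]


def get_weather(city):
    # Hypothetical stub: a real implementation would query a weather service.
    return {"city": city, "forecast": "unknown (stub)"}


# Maps tool names the model may emit to local Python callables.
REGISTRY = {"get_weather": get_weather}


def dispatch(tool_call_json):
    """Parse the model's JSON tool call and run the matching local function."""
    call = json.loads(tool_call_json)
    func = REGISTRY[call["name"]]
    return func(**call["arguments"])


# Stand-in for what a model might output when asked about Boston's weather.
model_output = '{"name": "get_weather", "arguments": {"city": "Boston"}}'
result = dispatch(model_output)
print(result)
```

In a real application, the result would be sent back to the model as a follow-up message so it can compose the final answer for the user.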

An illustration of function calling for OpenAI models.

After we discovered this issue, our engineering team submitted a pull request (#5224), now merged into the SGLang main branch, that adds a dedicated function-call parser for DeepSeek models. It includes a new parsing module and an updated chat template (“deepseek2.jinja”) for DeepSeek-V3, which achieved a 100% success rate in automated function-call tests, compared to just 45% with the legacy template. During review, contributors agreed to adopt the official V3 chat template, resolved earlier token-ID errors, and updated the documentation to match the V3 tokenizer config. After multiple continuous integration runs and reviews by the core team, the changes were approved and merged.
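
For readers curious about what a function-call parser does, the sketch below shows the general idea: scan the raw model output for delimited tool calls, extract the function name and JSON arguments, and return them separately from the ordinary text. This is not the code from the pull request. The delimiter strings and the `parse_tool_calls` function are hypothetical placeholders, and the actual DeepSeek special tokens and SGLang parsing module may differ.

```python
import json
import re

# Hypothetical delimiters chosen for illustration only; the real DeepSeek-V3
# special tokens and SGLang's parser may look different.
CALL_BEGIN = "<tool_call_begin>"
NAME_SEP = "<tool_sep>"
CALL_END = "<tool_call_end>"

# Matches: CALL_BEGIN <name> NAME_SEP <json arguments> CALL_END
_CALL_RE = re.compile(
    re.escape(CALL_BEGIN) + r"(.*?)" + re.escape(NAME_SEP) + r"(.*?)" + re.escape(CALL_END),
    re.DOTALL,
)


def parse_tool_calls(text):
    """Split raw model output into plain text and a list of structured tool calls."""
    calls = []
    for match in _CALL_RE.finditer(text):
        name = match.group(1).strip()
        raw_args = match.group(2).strip()
        try:
            arguments = json.loads(raw_args)
        except json.JSONDecodeError:
            continue  # drop calls whose arguments are not valid JSON
        calls.append({"name": name, "arguments": arguments})
    # Remove every delimited call span, leaving only the ordinary text.
    normal_text = _CALL_RE.sub("", text).strip()
    return normal_text, calls


raw = 'Let me check.<tool_call_begin>get_weather<tool_sep>{"city": "Boston"}<tool_call_end>'
text, calls = parse_tool_calls(raw)
print(text)
print(calls)
```

The chat template plays the complementary role: it formats the registered tools into the prompt so that the model emits calls in the exact shape the parser expects, which is why the template and the parser were updated together.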

We hope this new feature will empower developers to seamlessly integrate DeepSeek-V3 and R1 into their applications, unlocking more dynamic, context‑aware interactions with LLMs. We can’t wait to see the innovative use cases our community will create.

NetMind is an AI infrastructure and services company focused on making large language models easier to use and deploy. We provide flexible model inference APIs and AI service APIs, along with versions of them for the Model Context Protocol (MCP), an open standard for connecting models with tools and services.

Beyond APIs, we also offer infrastructure solutions, private deployment options, and AI consulting to help businesses build reliable, production-ready AI systems.

This contribution to SGLang is part of our ongoing effort to support the open-source ecosystem and help more teams unlock the full potential of LLMs.

Learn more at www.netmind.ai.